Troubleshooting Azure Cosmos DB Performance

In this lab, you will use the .NET SDK to tune Azure Cosmos DB requests to optimize the performance and cost of your application.

If this is your first lab and you have not already completed the setup for the lab content, see the instructions for Account Setup before starting this lab.

Create a .NET Core Project

-

On your local machine, locate the CosmosLabs folder in your Documents folder and open the Lab09 folder that will be used to contain the content of your .NET Core project.

-

In the Lab09 folder, right-click the folder and select the Open with Code menu option.

Alternatively, you can run a terminal in your current directory and execute the code . command.

-

In the Visual Studio Code window that appears, right-click the Explorer pane and select the Open in Terminal menu option.

-

In the terminal pane, enter and execute the following command:

    dotnet restore

This command will restore all packages specified as dependencies in the project.

-

In the terminal pane, enter and execute the following command:

    dotnet build

This command will build the project.

-

In the Explorer pane, select the DataTypes.cs file to open it in the editor.

-

Review the file and notice that it contains the data classes you will be working with in the following steps.

-

Select the Program.cs link in the Explorer pane to open the file in the editor.

-

For the _endpointUri variable, replace the placeholder value with the URI value and for the _primaryKey variable, replace the placeholder value with the PRIMARY KEY value from your Azure Cosmos DB account. Use these instructions to get these values if you do not already have them:

- For example, if your URI is https://cosmosacct.documents.azure.com:443/, your new variable assignment will look like this:

    private static readonly string _endpointUri = "https://cosmosacct.documents.azure.com:443/";

- For example, if your primary key is elzirrKCnXlacvh1CRAnQdYVbVLspmYHQyYrhx0PltHi8wn5lHVHFnd1Xm3ad5cn4TUcH4U0MSeHsVykkFPHpQ==, your new variable assignment will look like this:

    private static readonly string _primaryKey = "elzirrKCnXlacvh1CRAnQdYVbVLspmYHQyYrhx0PltHi8wn5lHVHFnd1Xm3ad5cn4TUcH4U0MSeHsVykkFPHpQ==";

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

dotnet build

Examining Response Headers

Azure Cosmos DB returns various response headers that can give you more metadata about your request and what operations occurred on the server side. The .NET SDK exposes many of these headers to you as properties of the ItemResponse<> class.

Observe RU Charge for Large Item

-

Locate the following code within the Main method:

    Database database = _client.GetDatabase(_databaseId);
    Container peopleContainer = database.GetContainer(_peopleContainerId);
    Container transactionContainer = database.GetContainer(_transactionContainerId);

-

After the last line of code, add a new line of code to call a new function we will create in the next step:

    await CreateMember(peopleContainer);

-

Next, create a new function that creates a new object and stores it in a variable named member:

    private static async Task CreateMember(Container peopleContainer)
    {
        object member = new Member { accountHolder = new Bogus.Person() };
    }

The Bogus library has a special helper class (Bogus.Person) that will generate a fictional person with randomized properties. Here’s an example of a fictional person JSON document:

    {
      "Gender": 1,
      "FirstName": "Rosalie",
      "LastName": "Dach",
      "FullName": "Rosalie Dach",
      "UserName": "Rosalie_Dach",
      "Avatar": "https://s3.amazonaws.com/uifaces/faces/twitter/mastermindesign/128.jpg",
      "Email": "Rosalie27@gmail.com",
      "DateOfBirth": "1962-02-22T21:48:51.9514906-05:00",
      "Address": {
        "Street": "79569 Wilton Trail",
        "Suite": "Suite 183",
        "City": "Caramouth",
        "ZipCode": "85941-7829",
        "Geo": { "Lat": -62.1607, "Lng": -123.9278 }
      },
      "Phone": "303.318.0433 x5168",
      "Website": "gerhard.com",
      "Company": {
        "Name": "Mertz - Gibson",
        "CatchPhrase": "Focused even-keeled policy",
        "Bs": "architect mission-critical markets"
      }
    }

-

Add a new line of code to invoke the CreateItemAsync method of the Container instance using the member variable as a parameter:

    ItemResponse<object> response = await peopleContainer.CreateItemAsync(member);

-

After that line, add code to print the value of the RequestCharge property of the ItemResponse<> instance and to return it (we will use this value in a later exercise). Because the method now returns a value, change its return type from Task to Task<double>:

    await Console.Out.WriteLineAsync($"{response.RequestCharge} RU/s");
    return response.RequestCharge;

-

The Main and CreateMember methods should now look like this:

    public static async Task Main(string[] args)
    {
        Database database = _client.GetDatabase(_databaseId);
        Container peopleContainer = database.GetContainer(_peopleContainerId);
        Container transactionContainer = database.GetContainer(_transactionContainerId);

        await CreateMember(peopleContainer);
    }

    private static async Task<double> CreateMember(Container peopleContainer)
    {
        object member = new Member { accountHolder = new Bogus.Person() };
        ItemResponse<object> response = await peopleContainer.CreateItemAsync(member);
        await Console.Out.WriteLineAsync($"{response.RequestCharge} RU/s");
        return response.RequestCharge;
    }

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the results of the console project. You should see the document creation operation use approximately 15 RU/s.

-

Return to the Azure Portal (http://portal.azure.com).

-

On the left side of the portal, select the Resource groups link.

-

In the Resource groups blade, locate and select the cosmoslab resource group.

-

In the cosmoslab blade, select the Azure Cosmos DB account you recently created.

-

In the Azure Cosmos DB blade, locate and select the Data Explorer link on the left side of the blade.

-

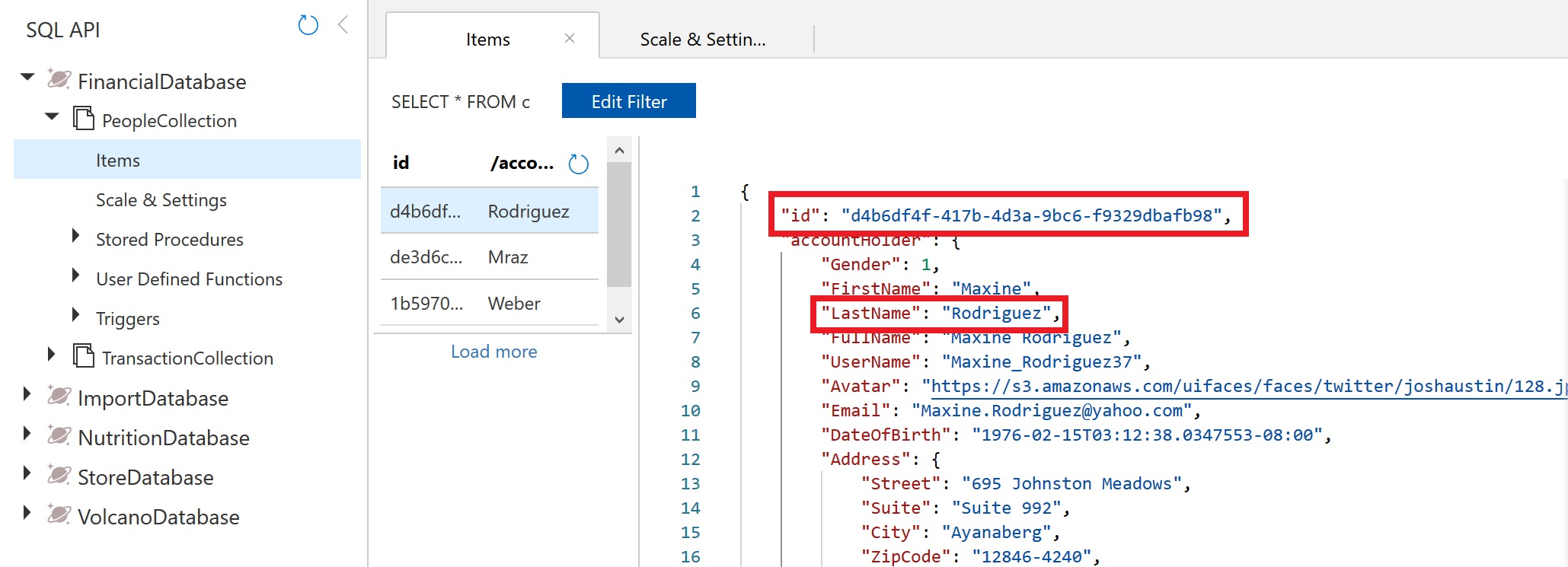

In the Data Explorer section, expand the FinancialDatabase database node and then select the PeopleCollection node.

-

Select the New SQL Query button at the top of the Data Explorer section.

-

In the query tab, notice the following SQL query.

    SELECT * FROM c

-

Select the Execute Query button in the query tab to run the query.

-

In the Results pane, observe the results of your query. Select Query Stats; you should see an execution cost of ~3 RU/s.

-

Return to the currently open Visual Studio Code editor containing your .NET Core project.

-

In the Visual Studio Code window, select the Program.cs file to open an editor tab for the file.

-

To view the RU charge for inserting a very large document, we will use the Bogus library to create a fictional family on our Member object. To create a fictional family, we will generate a spouse and an array of 4 fictional children:

    {
      "accountHolder": { ... },
      "relatives": {
        "spouse": { ... },
        "children": [ { ... }, { ... }, { ... }, { ... } ]
      }
    }

Each property will have a Bogus-generated fictional person. This should create a large JSON document that we can use to observe RU charges.

-

Within the Program.cs editor tab, locate the CreateMember method.

-

Within the CreateMember method, locate the following line of code:

    object member = new Member { accountHolder = new Bogus.Person() };

Replace that line of code with the following code:

    object member = new Member
    {
        accountHolder = new Bogus.Person(),
        relatives = new Family
        {
            spouse = new Bogus.Person(),
            children = Enumerable.Range(0, 4).Select(r => new Bogus.Person())
        }
    };

This new block of code will create the large JSON object discussed above.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the results of the console project. You should see this new operation require far more RU/s (~50 RU/s) than the simple JSON document did (~15 RU/s).

-

In the Data Explorer section, expand the FinancialDatabase database node and then select the PeopleCollection node.

-

Select the New SQL Query button at the top of the Data Explorer section.

-

In the query tab, replace the contents of the query editor with the following SQL query. This query will return the only item in your container with a property named relatives:

    SELECT * FROM c WHERE IS_DEFINED(c.relatives)

-

Select the Execute Query button in the query tab to run the query.

-

In the Results pane, observe the results of your query. You should see more data returned and a slightly higher RU cost.

Tune Index Policy

-

In the Data Explorer section, expand the FinancialDatabase database node, expand the PeopleCollection node, and then select the Scale & Settings option.

-

In the Settings section, locate the Indexing Policy field and observe the current default indexing policy:

    {
      "indexingMode": "consistent",
      "automatic": true,
      "includedPaths": [ { "path": "/*" } ],
      "excludedPaths": [ { "path": "/\"_etag\"/?" } ],
      "spatialIndexes": [
        { "path": "/*", "types": [ "Point", "LineString", "Polygon", "MultiPolygon" ] }
      ]
    }

This policy will index all paths in your JSON document, except for _etag, which is never used in queries. This policy will also index spatial data.

-

Replace the indexing policy with a new policy. This new policy excludes the /relatives/* path from indexing, effectively removing the relatives property (the spouse and children) of your large JSON document from the index:

    {
      "indexingMode": "consistent",
      "automatic": true,
      "includedPaths": [ { "path": "/*" } ],
      "excludedPaths": [ { "path": "/\"_etag\"/?" }, { "path": "/relatives/*" } ],
      "spatialIndexes": [
        { "path": "/*", "types": [ "Point", "LineString", "Polygon", "MultiPolygon" ] }
      ]
    }

-

Select the Save button at the top of the section to persist your new indexing policy.

-

Select the New SQL Query button at the top of the Data Explorer section.

-

In the query tab, replace the contents of the query editor with the following SQL query:

    SELECT * FROM c WHERE IS_DEFINED(c.relatives)

-

Select the Execute Query button in the query tab to run the query.

You will see immediately that you can still determine if the /relatives path is defined.

-

In the query tab, replace the contents of the query editor with the following SQL query:

    SELECT * FROM c WHERE IS_DEFINED(c.relatives) ORDER BY c.relatives.spouse.FirstName

-

Select the Execute Query button in the query tab to run the query.

This query will fail immediately because the property it sorts on is not indexed. Keep in mind when defining indexes that ORDER BY only works on indexed properties, even though the IS_DEFINED filter in the previous query still ran against the excluded path.

-

Return to the currently open Visual Studio Code editor containing your .NET Core project.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the results of the console project. You should see a difference in the number of RU/s (~26 RU/s vs ~48 RU/s previously) required to create this item. This is due to the indexer skipping the paths you excluded.

Troubleshooting Requests

First, you will use the .NET SDK to issue requests beyond the assigned capacity for a container. Request unit consumption is evaluated at a per-second rate. For applications that exceed the provisioned request unit rate, requests are rate-limited until the rate drops below the provisioned throughput level. When a request is rate-limited, the server preemptively ends the request with an HTTP status code of 429 (RequestRateTooLargeException) and returns the x-ms-retry-after-ms header. The header indicates the amount of time, in milliseconds, that the client must wait before retrying the request. You will observe the rate-limiting of your requests in an example application.
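The wait-and-retry contract described above can be sketched in isolation. The following is a minimal illustration, not the SDK's own retry logic: RateLimitedException and ThrottleRetry are hypothetical names introduced here, and the exception merely stands in for a 429 response that carries the x-ms-retry-after-ms delay.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for a rate-limited (HTTP 429) failure; not an SDK type.
public class RateLimitedException : Exception
{
    // The delay the server asked the client to wait before retrying.
    public TimeSpan RetryAfter { get; }

    public RateLimitedException(TimeSpan retryAfter)
        : base("429: request rate too large")
    {
        RetryAfter = retryAfter;
    }
}

public static class ThrottleRetry
{
    // Runs the operation; on a rate-limited failure, waits for the suggested
    // delay and tries again, allowing at most maxRetries retries.
    public static async Task<T> RunAsync<T>(Func<Task<T>> operation, int maxRetries)
    {
        for (int attempt = 0; ; attempt++)
        {
            try
            {
                return await operation();
            }
            catch (RateLimitedException ex) when (attempt < maxRetries)
            {
                // Honor the wait time before issuing the request again.
                await Task.Delay(ex.RetryAfter);
            }
        }
    }
}
```

A caller would wrap each request in ThrottleRetry.RunAsync(..., maxRetries). Once the retries are used up, the last rate-limited failure propagates to the caller, which is roughly what you will see later in this lab when the application crashes.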

Reducing RU Throughput for a Container

-

In the Data Explorer section, expand the FinancialDatabase database node, expand the TransactionCollection node, and then select the Scale & Settings option.

-

In the Settings section, locate the Throughput field and update its value to 400.

This is the minimum throughput that you can allocate to a container.

-

Select the Save button at the top of the section to persist your new throughput allocation.

Observing Throttling (HTTP 429)

-

Return to the currently open Visual Studio Code editor containing your .NET Core project.

-

Select the Program.cs link in the Explorer pane to open the file in the editor.

-

Locate the await CreateMember(peopleContainer) line within the Main method. Comment out this line and add a new line below so it looks like this:

    public static async Task Main(string[] args)
    {
        Database database = _client.GetDatabase(_databaseId);
        Container peopleContainer = database.GetContainer(_peopleContainerId);
        Container transactionContainer = database.GetContainer(_transactionContainerId);

        //await CreateMember(peopleContainer);
        await CreateTransactions(transactionContainer);
    }

-

Below the CreateMember method, create a new method CreateTransactions:

    private static async Task CreateTransactions(Container transactionContainer)
    {
    }

-

Add the following code to create a collection of Transaction instances:

    var transactions = new Bogus.Faker<Transaction>()
        .RuleFor(t => t.id, (fake) => Guid.NewGuid().ToString())
        .RuleFor(t => t.amount, (fake) => Math.Round(fake.Random.Double(5, 500), 2))
        .RuleFor(t => t.processed, (fake) => fake.Random.Bool(0.6f))
        .RuleFor(t => t.paidBy, (fake) => $"{fake.Name.FirstName().ToLower()}.{fake.Name.LastName().ToLower()}")
        .RuleFor(t => t.costCenter, (fake) => fake.Commerce.Department(1).ToLower())
        .GenerateLazy(100);

-

Add the following foreach block to iterate over the Transaction instances:

    foreach (var transaction in transactions)
    {
    }

-

Within the foreach block, add the following line of code to asynchronously create an item and save the result of the creation task to a variable:

    ItemResponse<Transaction> result = await transactionContainer.CreateItemAsync(transaction);

The CreateItemAsync method of the Container class takes in an object that you would like to serialize into JSON and store as an item within the specified collection.

-

Still within the foreach block, add the following line of code to write the value of the newly created resource’s id property to the console:

    await Console.Out.WriteLineAsync($"Item Created\t{result.Resource.id}");

The ItemResponse type has a property named Resource that can give you access to the item instance resulting from the operation.

-

Your CreateTransactions method should look like this:

    private static async Task CreateTransactions(Container transactionContainer)
    {
        var transactions = new Bogus.Faker<Transaction>()
            .RuleFor(t => t.id, (fake) => Guid.NewGuid().ToString())
            .RuleFor(t => t.amount, (fake) => Math.Round(fake.Random.Double(5, 500), 2))
            .RuleFor(t => t.processed, (fake) => fake.Random.Bool(0.6f))
            .RuleFor(t => t.paidBy, (fake) => $"{fake.Name.FirstName().ToLower()}.{fake.Name.LastName().ToLower()}")
            .RuleFor(t => t.costCenter, (fake) => fake.Commerce.Department(1).ToLower())
            .GenerateLazy(100);

        foreach (var transaction in transactions)
        {
            ItemResponse<Transaction> result = await transactionContainer.CreateItemAsync(transaction);
            await Console.Out.WriteLineAsync($"Item Created\t{result.Resource.id}");
        }
    }

As a reminder, the Bogus library generates a set of test data. In this example, you are creating 100 items using the Bogus library and the rules listed above. The GenerateLazy method tells the Bogus library to prepare for a request of 100 items by returning a variable of type IEnumerable<Transaction>. Since LINQ uses deferred execution by default, the items aren’t actually created until the collection is iterated. The foreach loop at the end of this code block iterates over the collection and creates items in Azure Cosmos DB.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application. You should see a list of item ids associated with new items that are being created by this tool.
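The deferred execution mentioned in the GenerateLazy note is easy to observe outside of Bogus. This sketch uses plain LINQ rather than GenerateLazy, and DeferredExecutionDemo is a hypothetical name introduced for illustration: the counter stays at zero until the sequence is actually enumerated.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredExecutionDemo
{
    // Counts how many items have actually been generated so far.
    public static int Generated;

    // Returns a lazy sequence; the Select body does not run until enumeration.
    public static IEnumerable<string> LazyIds(int count)
    {
        return Enumerable.Range(0, count).Select(i =>
        {
            Generated++;
            return $"item-{i}";
        });
    }
}
```

Calling DeferredExecutionDemo.LazyIds(3) leaves Generated at 0; only a foreach or ToList() over the result raises it to 3. Enumerating the same sequence a second time runs the generator again, which is worth remembering whenever a lazy sequence is iterated more than once.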

-

Back in the code editor tab, locate the following lines of code:

    foreach (var transaction in transactions)
    {
        ItemResponse<Transaction> result = await transactionContainer.CreateItemAsync(transaction);
        await Console.Out.WriteLineAsync($"Item Created\t{result.Resource.id}");
    }

Replace those lines of code with the following code:

    List<Task<ItemResponse<Transaction>>> tasks = new List<Task<ItemResponse<Transaction>>>();
    foreach (var transaction in transactions)
    {
        Task<ItemResponse<Transaction>> resultTask = transactionContainer.CreateItemAsync(transaction);
        tasks.Add(resultTask);
    }
    Task.WaitAll(tasks.ToArray());
    foreach (var task in tasks)
    {
        await Console.Out.WriteLineAsync($"Item Created\t{task.Result.Resource.id}");
    }

We are going to attempt to run as many of these creation tasks in parallel as possible. Remember, our container is configured at the minimum of 400 RU/s.

-

Your CreateTransactions method should look like this:

    private static async Task CreateTransactions(Container transactionContainer)
    {
        var transactions = new Bogus.Faker<Transaction>()
            .RuleFor(t => t.id, (fake) => Guid.NewGuid().ToString())
            .RuleFor(t => t.amount, (fake) => Math.Round(fake.Random.Double(5, 500), 2))
            .RuleFor(t => t.processed, (fake) => fake.Random.Bool(0.6f))
            .RuleFor(t => t.paidBy, (fake) => $"{fake.Name.FirstName().ToLower()}.{fake.Name.LastName().ToLower()}")
            .RuleFor(t => t.costCenter, (fake) => fake.Commerce.Department(1).ToLower())
            .GenerateLazy(100);

        List<Task<ItemResponse<Transaction>>> tasks = new List<Task<ItemResponse<Transaction>>>();
        foreach (var transaction in transactions)
        {
            Task<ItemResponse<Transaction>> resultTask = transactionContainer.CreateItemAsync(transaction);
            tasks.Add(resultTask);
        }
        Task.WaitAll(tasks.ToArray());
        foreach (var task in tasks)
        {
            await Console.Out.WriteLineAsync($"Item Created\t{task.Result.Resource.id}");
        }
    }

- The first foreach loop iterates over the created transactions and creates asynchronous tasks, which are stored in a List. Each asynchronous task will issue a request to Azure Cosmos DB. These requests are issued in parallel and could generate a 429 - too many requests exception since your container does not have enough throughput provisioned to handle the volume of requests.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

This run should complete successfully. We are only creating 100 items and we most likely will not run into any throughput issues here.

-

Back in the code editor tab, locate the following line of code:

    .GenerateLazy(100);

Replace that line of code with the following code:

    .GenerateLazy(5000);

We are going to try to create 5000 items in parallel to see if we can hit our throughput limit.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe that the application will crash after some time.

This run will most likely hit our throughput limit. You will see multiple error messages indicating that specific requests have failed.

Increasing RU Throughput to Reduce Throttling

-

Switch back to the Azure Portal. In the Data Explorer section, expand the FinancialDatabase database node, expand the TransactionCollection node, and then select the Scale & Settings option.

-

In the Settings section, locate the Throughput field and update its value to 10000.

-

Select the Save button at the top of the section to persist your new throughput allocation.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe that the application will complete after some time.

-

Return to the Settings section in the Azure Portal and change the Throughput value back to 400.

-

Select the Save button at the top of the section to persist your new throughput allocation.
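One rough way to reason about why 100 parallel creates finished, 5000 failed at 400 RU/s, and 5000 finished at 10000 RU/s is to estimate how long each burst takes to drain. The sketch below is a simplified model under stated assumptions, not a description of how the service or the SDK schedules requests. ThroughputEstimate is a hypothetical name, and both the per-item cost (10 RU) and the client's retry window (30 seconds) are assumed values; use the RequestCharge you measure and the retry settings of your own client instead.

```csharp
using System;

public static class ThroughputEstimate
{
    // Assumed values for illustration only; neither comes from the lab.
    public const double AssumedRuPerCreate = 10.0;
    public const double AssumedRetryWindowSeconds = 30.0;

    // Seconds needed to absorb a burst when the container admits
    // at most provisionedRuPerSecond request units each second.
    public static double SecondsToDrain(int requestCount, double ruPerRequest, double provisionedRuPerSecond)
    {
        return requestCount * ruPerRequest / provisionedRuPerSecond;
    }

    // True when the burst drains within the time the client keeps retrying.
    public static bool FitsRetryWindow(int requestCount, double ruPerRequest, double provisionedRuPerSecond, double retryWindowSeconds)
    {
        return SecondsToDrain(requestCount, ruPerRequest, provisionedRuPerSecond) <= retryWindowSeconds;
    }
}
```

With those assumptions, 100 items at 400 RU/s drain in 2.5 seconds, 5000 items at 400 RU/s need 125 seconds (well past the assumed retry window, so some requests fail), and 5000 items at 10000 RU/s drain in 5 seconds.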

Tuning Queries and Reads

You will now tune your requests to Azure Cosmos DB by manipulating the SQL query and properties of the RequestOptions class in the .NET SDK.

Measuring RU Charge

-

Locate the Main method, comment out the CreateTransactions call, and add a new line await QueryTransactions(transactionContainer);. The method should look like this:

    public static async Task Main(string[] args)
    {
        Database database = _client.GetDatabase(_databaseId);
        Container peopleContainer = database.GetContainer(_peopleContainerId);
        Container transactionContainer = database.GetContainer(_transactionContainerId);

        //await CreateMember(peopleContainer);
        //await CreateTransactions(transactionContainer);
        await QueryTransactions(transactionContainer);
    }

-

Create a new function QueryTransactions and add the following line of code that will store a SQL query in a string variable:

    private static async Task QueryTransactions(Container transactionContainer)
    {
        string sql = "SELECT TOP 1000 * FROM c WHERE c.processed = true ORDER BY c.amount DESC";
    }

This query will perform a cross-partition ORDER BY and only return the top 1000 out of 50000 items.

-

Add the following line of code to create an item query instance:

    FeedIterator<Transaction> query = transactionContainer.GetItemQueryIterator<Transaction>(sql);

-

Add the following line of code to get the first “page” of results:

    var result = await query.ReadNextAsync();

We will not enumerate the full result set. We are only interested in the metrics for the first page of results.

-

Add the following line of code to print out the Request Charge metric for the query to the console:

    await Console.Out.WriteLineAsync($"Request Charge: {result.RequestCharge} RU/s");

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application. You should see the Request Charge metric printed out in your console window. It should be ~81 RU/s.

-

Back in the code editor tab, locate the following line of code:

    string sql = "SELECT TOP 1000 * FROM c WHERE c.processed = true ORDER BY c.amount DESC";

Replace that line of code with the following code:

    string sql = "SELECT * FROM c WHERE c.processed = true";

This new query does not perform a cross-partition ORDER BY.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application. You should see the Request Charge value drop to ~35 RU/s.

-

Back in the code editor tab, locate the following line of code:

    string sql = "SELECT * FROM c WHERE c.processed = true";

Replace that line of code with the following code:

    string sql = "SELECT * FROM c";

This new query does not filter the result set.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application. This query should be ~21 RU/s. Observe the slight differences in the various metric values from the last few queries.

-

Back in the code editor tab, locate the following line of code:

    string sql = "SELECT * FROM c";

Replace that line of code with the following code:

    string sql = "SELECT c.id FROM c";

This new query does not filter the result set, and only returns part of the documents.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application. This should be about 22 RU/s. While the payload returned is much smaller, the RU/s is slightly higher because the query engine did work to return the specific property.

Managing SDK Query Options

-

Locate the QueryTransactions method and delete the code added for the previous section so it again looks like this:

    private static async Task QueryTransactions(Container transactionContainer)
    {
    }

-

Add the following lines of code to create variables to configure query options:

    int maxItemCount = 100;
    int maxDegreeOfParallelism = 1;
    int maxBufferedItemCount = 0;

-

Add the following lines of code to configure options for a query from the variables:

    QueryRequestOptions options = new QueryRequestOptions
    {
        MaxItemCount = maxItemCount,
        MaxBufferedItemCount = maxBufferedItemCount,
        MaxConcurrency = maxDegreeOfParallelism
    };

-

Add the following lines of code to write various values to the console window:

    await Console.Out.WriteLineAsync($"MaxItemCount:\t{maxItemCount}");
    await Console.Out.WriteLineAsync($"MaxDegreeOfParallelism:\t{maxDegreeOfParallelism}");
    await Console.Out.WriteLineAsync($"MaxBufferedItemCount:\t{maxBufferedItemCount}");

-

Add the following line of code that will store a SQL query in a string variable:

    string sql = "SELECT * FROM c WHERE c.processed = true ORDER BY c.amount DESC";

This query will perform a cross-partition ORDER BY on a filtered result set.

-

Add the following line of code to create and start a new high-precision timer:

    Stopwatch timer = Stopwatch.StartNew();

-

Add the following line of code to create an item query instance:

    FeedIterator<Transaction> query = transactionContainer.GetItemQueryIterator<Transaction>(sql, requestOptions: options);

-

Add the following lines of code to enumerate the result set.

    while (query.HasMoreResults)
    {
        var result = await query.ReadNextAsync();
    }

Since the results are paged, we will need to call the ReadNextAsync method multiple times in a while loop.

-

Add the following line of code to stop the timer:

    timer.Stop();

-

Add the following line of code to write the timer’s results to the console window:

    await Console.Out.WriteLineAsync($"Elapsed Time:\t{timer.Elapsed.TotalSeconds}");

-

The QueryTransactions method should now look like this:

    private static async Task QueryTransactions(Container transactionContainer)
    {
        int maxItemCount = 100;
        int maxDegreeOfParallelism = 1;
        int maxBufferedItemCount = 0;

        QueryRequestOptions options = new QueryRequestOptions
        {
            MaxItemCount = maxItemCount,
            MaxBufferedItemCount = maxBufferedItemCount,
            MaxConcurrency = maxDegreeOfParallelism
        };

        await Console.Out.WriteLineAsync($"MaxItemCount:\t{maxItemCount}");
        await Console.Out.WriteLineAsync($"MaxDegreeOfParallelism:\t{maxDegreeOfParallelism}");
        await Console.Out.WriteLineAsync($"MaxBufferedItemCount:\t{maxBufferedItemCount}");

        string sql = "SELECT * FROM c WHERE c.processed = true ORDER BY c.amount DESC";

        Stopwatch timer = Stopwatch.StartNew();

        FeedIterator<Transaction> query = transactionContainer.GetItemQueryIterator<Transaction>(sql, requestOptions: options);
        while (query.HasMoreResults)
        {
            var result = await query.ReadNextAsync();
        }
        timer.Stop();
        await Console.Out.WriteLineAsync($"Elapsed Time:\t{timer.Elapsed.TotalSeconds}");
    }

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

This initial query should take an unexpectedly long amount of time. This will require us to optimize our SDK options.

-

Back in the code editor tab, locate the following line of code:

    int maxDegreeOfParallelism = 1;

Replace that line of code with the following:

    int maxDegreeOfParallelism = 5;

Setting the maxDegreeOfParallelism query parameter to a value of 1 effectively eliminates parallelism. Here we “bump up” the parallelism to a value of 5.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

You should see a very slight positive impact considering you now have some form of parallelism.

-

Back in the code editor tab, locate the following line of code:

    int maxBufferedItemCount = 0;

Replace that line of code with the following code:

    int maxBufferedItemCount = -1;

Setting the MaxBufferedItemCount property to a value of -1 effectively tells the SDK to manage this setting.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

Again, this should have a slight positive impact on your performance time.

-

Back in the code editor tab, locate the following line of code:

    int maxDegreeOfParallelism = 5;

Replace that line of code with the following code:

    int maxDegreeOfParallelism = -1;

Parallel query works by querying multiple partitions in parallel. However, data from an individual partition is fetched serially with respect to the query. Setting the maxDegreeOfParallelism variable to a value of -1 effectively tells the SDK to manage this setting. Setting it to the number of partitions has the maximum chance of achieving the most performant query, provided all other system conditions remain the same.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

Again, this should have a slight impact on your performance time.

-

Back in the code editor tab, locate the following line of code:

    int maxItemCount = 100;

Replace that line of code with the following code:

    int maxItemCount = 500;

We are increasing the number of items returned per “page” in an attempt to improve the performance of the query.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

You will notice that the query performance improved dramatically. This may be an indicator that our query was bottlenecked by the client computer.

-

Back in the code editor tab, locate the following line of code:

    int maxItemCount = 500;

Replace that line of code with the following code:

    int maxItemCount = 1000;

For large queries, it is recommended that you increase the page size up to a value of 1000.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

By increasing the page size, you have sped up the query even more.

-

Back in the code editor tab, locate the following line of code:

    int maxBufferedItemCount = -1;

Replace that line of code with the following code:

    int maxBufferedItemCount = 50000;

Parallel query is designed to pre-fetch results while the current batch of results is being processed by the client. The pre-fetching helps in overall latency improvement of a query. MaxBufferedItemCount is the parameter to limit the number of pre-fetched results. Setting MaxBufferedItemCount to the expected number of results returned (or a higher number) allows the query to receive maximum benefit from pre-fetching.

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

    dotnet run

-

Observe the output of the console application.

In most cases, this change will decrease your query time by a small amount.
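Part of the speed-up from larger pages comes from simple arithmetic: fewer pages means fewer ReadNextAsync round trips. The sketch below computes that count. PagingEstimate is a hypothetical helper, and the total of 30,000 matching items used in the note after it is an assumption (roughly 60% of the 50000 items, based on the Bool(0.6f) rule); the real count in your container will differ, and the service may return pages smaller than MaxItemCount, so treat the result as a lower bound.

```csharp
using System;

public static class PagingEstimate
{
    // Minimum number of page fetches needed when each page holds at most pageSize items.
    public static int PageCount(int totalItems, int pageSize)
    {
        if (pageSize <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(pageSize));
        }

        // Integer ceiling division: a partially filled last page still costs a round trip.
        return (totalItems + pageSize - 1) / pageSize;
    }
}
```

For the assumed 30,000 results, a page size of 100 needs at least 300 round trips, 500 needs at least 60, and 1000 needs at least 30.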

Reading and Querying Items

-

In the Azure Cosmos DB blade, locate and select the Data Explorer link on the left side of the blade.

-

In the Data Explorer section, expand the FinancialDatabase database node, expand the PeopleCollection node, and then select the Items option.

-

Take note of the id property value of any document as well as that document’s partition key.

-

Locate the Main method, comment out the last line, and add a new line await QueryMember(peopleContainer); so it looks like this:

    public static async Task Main(string[] args)
    {
        Database database = _client.GetDatabase(_databaseId);
        Container peopleContainer = database.GetContainer(_peopleContainerId);
        Container transactionContainer = database.GetContainer(_transactionContainerId);

        //await CreateMember(peopleContainer);
        //await CreateTransactions(transactionContainer);
        //await QueryTransactions(transactionContainer);
        await QueryMember(peopleContainer);
    }

-

Below the QueryTransactions method, create a new method that looks like this:

    private static async Task QueryMember(Container peopleContainer)
    {
    }

-

Add the following line of code that will store a SQL query in a string variable (replacing example.document with the id value that you noted earlier):

string sql = "SELECT TOP 1 * FROM c WHERE c.id = 'example.document'";

This query will find a single item matching the specified unique id.

-

Add the following line of code to create an item query instance:

FeedIterator<object> query = peopleContainer.GetItemQueryIterator<object>(sql);

-

Add the following line of code to get the first page of results and then store them in a variable of type FeedResponse<>:

FeedResponse<object> response = await query.ReadNextAsync();

We only need to retrieve a single page since the query returns only the TOP 1 item.

-

Add the following lines of code to print out the content of the retrieved item and then the value of the RequestCharge property of the FeedResponse<> instance:

await Console.Out.WriteLineAsync($"{response.Resource.First()}");
await Console.Out.WriteLineAsync($"{response.RequestCharge} RUs");

-

The method should look like this:

private static async Task QueryMember(Container peopleContainer)
{
    string sql = "SELECT TOP 1 * FROM c WHERE c.id = '372a9e8e-da22-4f7a-aff8-3a86f31b2934'";
    FeedIterator<object> query = peopleContainer.GetItemQueryIterator<object>(sql);
    FeedResponse<object> response = await query.ReadNextAsync();
    await Console.Out.WriteLineAsync($"{response.Resource.First()}");
    await Console.Out.WriteLineAsync($"{response.RequestCharge} RUs");
}

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

dotnet run

-

Observe the output of the console application.

You should see that about 3 RUs were used to query for the item. Make note of the LastName property value of the item, as you will use it in the next step.

-

Locate the Main method, comment out the last line, and add a new line await ReadMember(peopleContainer); so it looks like this:

public static async Task Main(string[] args)
{
    Database database = _client.GetDatabase(_databaseId);
    Container peopleContainer = database.GetContainer(_peopleContainerId);
    Container transactionContainer = database.GetContainer(_transactionContainerId);
    //await CreateMember(peopleContainer);
    //await CreateTransactions(transactionContainer);
    //await QueryTransactions(transactionContainer);
    //await QueryMember(peopleContainer);
    await ReadMember(peopleContainer);
}

-

Below the QueryMember method, create a new method that looks like this:

private static async Task<double> ReadMember(Container peopleContainer)
{
}

-

Add the following code to use the ReadItemAsync method of the Container class to retrieve an item using the unique id and the partition key set to the last name from the previous step. Replace the example.document and <Last Name> tokens:

ItemResponse<object> response = await peopleContainer.ReadItemAsync<object>("example.document", new PartitionKey("<Last Name>"));

-

Add the following lines of code to print out the value of the RequestCharge property of the ItemResponse<T> instance and return the request charge (we will use this value in a later exercise):

await Console.Out.WriteLineAsync($"{response.RequestCharge} RUs");
return response.RequestCharge;

-

The method should now look similar to this:

private static async Task<double> ReadMember(Container peopleContainer)
{
    ItemResponse<object> response = await peopleContainer.ReadItemAsync<object>("372a9e8e-da22-4f7a-aff8-3a86f31b2934", new PartitionKey("Batz"));
    await Console.Out.WriteLineAsync($"{response.RequestCharge} RUs");
    return response.RequestCharge;
}

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

dotnet run

-

Observe the output of the console application.

You should see that it took fewer RUs (1 RU vs ~3 RUs) to obtain the item directly when you have the item’s id and partition key value. This is so efficient because ReadItemAsync() bypasses the query engine entirely and goes directly to the backend store to retrieve the item. A read of this type for 1 KB of data or less will always cost 1 RU.
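When a query is still needed but the partition key is known, the query can be scoped to that partition and the id passed as a parameter instead of being embedded in the SQL string. The following is a minimal sketch, assuming the Microsoft.Azure.Cosmos (v3) package used in this lab. The MemberQueries class and its two methods are hypothetical names, and the request charge you observe may differ from the figures above.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Azure.Cosmos;

public static class MemberQueries
{
    // Builds a parameterized version of the query used in QueryMember.
    public static QueryDefinition BuildMemberQuery(string id)
    {
        return new QueryDefinition("SELECT TOP 1 * FROM c WHERE c.id = @id")
            .WithParameter("@id", id);
    }

    // Runs the query against a single logical partition and returns its request charge.
    public static async Task<double> QueryMemberInPartition(Container peopleContainer, string id, string lastName)
    {
        QueryRequestOptions options = new QueryRequestOptions
        {
            // Restricts the query to the partition that holds the item.
            PartitionKey = new PartitionKey(lastName)
        };

        FeedIterator<object> query = peopleContainer.GetItemQueryIterator<object>(
            BuildMemberQuery(id), requestOptions: options);
        FeedResponse<object> response = await query.ReadNextAsync();

        await Console.Out.WriteLineAsync($"{response.FirstOrDefault()}");
        await Console.Out.WriteLineAsync($"{response.RequestCharge} RUs");
        return response.RequestCharge;
    }
}
```

This still goes through the query engine, so when both the id and the partition key are available, ReadItemAsync remains the more direct choice.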

Setting Throughput for Expected Workloads

Using appropriate RU/s settings for container or database throughput can allow you to meet desired performance at minimal cost. Deciding on a good baseline and varying settings based on expected usage patterns are both strategies that can help.

Estimating Throughput Needs

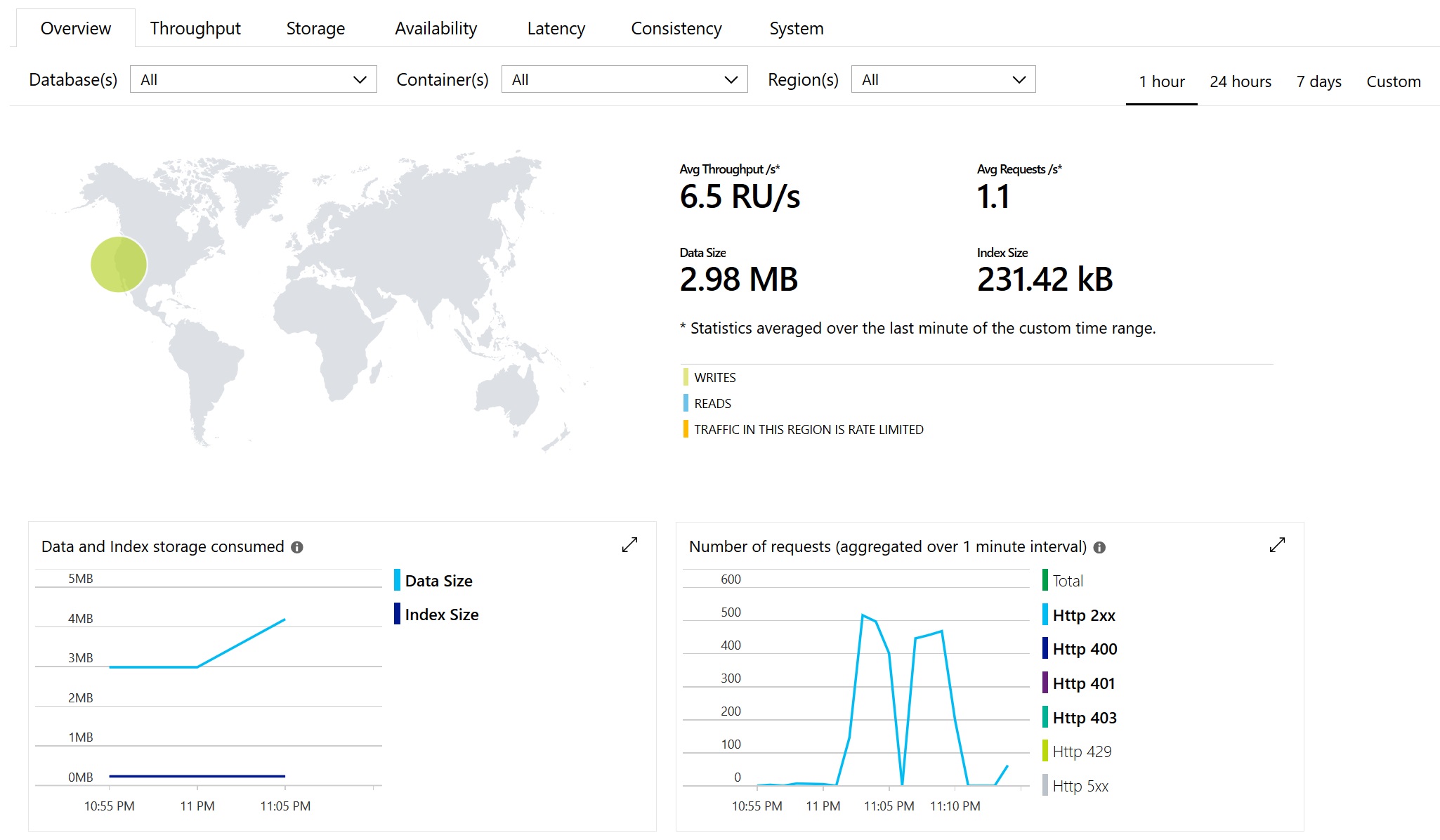

- In the Azure Cosmos DB blade, locate and select the Metrics link on the left side of the blade under the Monitoring section.

-

Observe the values in the Number of requests graph to see the volume of requests your lab work has been making to your Cosmos containers.

Various parameters can be changed to adjust the data shown in the graphs and there is also an option to export data to csv for further analysis. For an existing application this can be helpful in determining your query volume.

-

Return to the Visual Studio Code window and locate the Main method. Comment out the last line and add a new line await EstimateThroughput(peopleContainer); so it looks like this:

public static async Task Main(string[] args)
{
    Database database = _client.GetDatabase(_databaseId);
    Container peopleContainer = database.GetContainer(_peopleContainerId);
    Container transactionContainer = database.GetContainer(_transactionContainerId);
    //await CreateMember(peopleContainer);
    //await CreateTransactions(transactionContainer);
    //await QueryTransactions(transactionContainer);
    //await QueryMember(peopleContainer);
    //await ReadMember(peopleContainer);
    await EstimateThroughput(peopleContainer);
}

-

At the bottom of the class, add a new method EstimateThroughput with the following code:

private static async Task EstimateThroughput(Container peopleContainer)
{
}

-

Add the following lines of code to this method. These variables represent the estimated workload for our application:

int expectedWritesPerSec = 200;
int expectedReadsPerSec = 800;

These types of numbers could come from planning a new application or tracking actual usage of an existing one. Details of determining workload are outside the scope of this lab.

-

Next, add the following lines of code to call CreateMember and ReadMember and capture the request charge returned from them. These variables represent the actual cost of these operations for our application:

double writeCost = await CreateMember(peopleContainer);
double readCost = await ReadMember(peopleContainer);

-

Add the following line of code as the last line of this method to print out the estimated throughput needs of our application based on our test queries:

await Console.Out.WriteLineAsync($"Estimated load: {writeCost * expectedWritesPerSec + readCost * expectedReadsPerSec} RU/s");

-

The EstimateThroughput method should now look like this:

private static async Task EstimateThroughput(Container peopleContainer)
{
    int expectedWritesPerSec = 200;
    int expectedReadsPerSec = 800;
    double writeCost = await CreateMember(peopleContainer);
    double readCost = await ReadMember(peopleContainer);
    await Console.Out.WriteLineAsync($"Estimated load: {writeCost * expectedWritesPerSec + readCost * expectedReadsPerSec} RU/s");
}

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

dotnet run

-

Observe the output of the console application.

You should see the total throughput needed for our application based on our estimates. This can then be used to guide how much throughput to provision for the application. To get the most accurate estimate for RU/s needs for your applications, you can follow the same pattern to estimate RU/s needs for every operation in your application multiplied by the number of those operations you expect per second. Alternatively you can use the Metrics tab in the portal to measure average throughput.
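The same pattern extends to any number of operation types. The following is a minimal sketch that sums cost times rate over a list of operations and rounds the result up to a value that can be provisioned. It assumes throughput is provisioned in increments of 100 RU/s with a floor of 400 RU/s. The ThroughputEstimator class and the 10.5 RU write cost in the usage example are hypothetical; use the charges your own run reports.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ThroughputEstimator
{
    // Sums (RU cost per operation * operations per second) over all operation types.
    public static double EstimateLoad(IEnumerable<(double CostInRu, int PerSecond)> operations)
    {
        return operations.Sum(op => op.CostInRu * op.PerSecond);
    }

    // Rounds the estimate up to the next multiple of 100 RU/s, never below the minimum.
    // Assumption: 100 RU/s increments and a default minimum of 400 RU/s.
    public static int ToProvisionedThroughput(double estimatedLoad, int minimum = 400)
    {
        int roundedUp = (int)Math.Ceiling(estimatedLoad / 100.0) * 100;
        return Math.Max(roundedUp, minimum);
    }
}
```

For example, with a hypothetical write cost of 10.5 RUs at 200 writes per second and a read cost of 1 RU at 800 reads per second, EstimateLoad returns 10.5 * 200 + 1 * 800 = 2900, and ToProvisionedThroughput(2900) returns 2900.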

Adjusting for Usage Patterns

Many applications have workloads that vary over time in a predictable way. For example, business applications often have a heavy workload during a 9-5 business day but minimal usage outside of those hours. Cosmos throughput settings can be varied to match this type of usage pattern.

-

Locate the Main method, comment out the last line, and add a new line await UpdateThroughput(peopleContainer); so it looks like this:

public static async Task Main(string[] args)
{
    Database database = _client.GetDatabase(_databaseId);
    Container peopleContainer = database.GetContainer(_peopleContainerId);
    Container transactionContainer = database.GetContainer(_transactionContainerId);
    //await CreateMember(peopleContainer);
    //await CreateTransactions(transactionContainer);
    //await QueryTransactions(transactionContainer);
    //await QueryMember(peopleContainer);
    //await ReadMember(peopleContainer);
    //await EstimateThroughput(peopleContainer);
    await UpdateThroughput(peopleContainer);
}

-

At the bottom of the class, create a new method UpdateThroughput:

private static async Task UpdateThroughput(Container peopleContainer)
{
}

-

Add the following code to retrieve the current RU/sec setting for the container:

int? throughput = await peopleContainer.ReadThroughputAsync();
await Console.Out.WriteLineAsync($"Current Throughput {throughput} RU/s");

Note that ReadThroughputAsync returns a nullable value. Provisioned throughput can be set either at the container or database level. If it is set at the database level, this call on the Container will return null. If it is set at the container level, the same method on Database will return null.

-

Add the following lines of code to print out the minimum throughput value for the container:

ThroughputResponse throughputResponse = await peopleContainer.ReadThroughputAsync(new RequestOptions());
int? minThroughput = throughputResponse.MinThroughput;
await Console.Out.WriteLineAsync($"Minimum Throughput {minThroughput} RU/s");

Although the overall minimum throughput that can be set is 400 RU/s, specific containers or databases may have higher limits depending on the size of stored data, previous maximum throughput settings, or the number of containers in a database. Trying to set a value below the available minimum will cause an exception. The current allowed minimum value can be found on the ThroughputResponse.MinThroughput property.

-

Add the following code to update the RU/s setting for the container then print out the updated RU/s for the container:

await peopleContainer.ReplaceThroughputAsync(1000);
throughput = await peopleContainer.ReadThroughputAsync();
await Console.Out.WriteLineAsync($"New Throughput {throughput} RU/s");

-

The finished method should look like this:

private static async Task UpdateThroughput(Container peopleContainer)
{
    int? throughput = await peopleContainer.ReadThroughputAsync();
    await Console.Out.WriteLineAsync($"Current Throughput {throughput} RU/s");
    ThroughputResponse throughputResponse = await peopleContainer.ReadThroughputAsync(new RequestOptions());
    int? minThroughput = throughputResponse.MinThroughput;
    await Console.Out.WriteLineAsync($"Minimum Throughput {minThroughput} RU/s");
    await peopleContainer.ReplaceThroughputAsync(1000);
    throughput = await peopleContainer.ReadThroughputAsync();
    await Console.Out.WriteLineAsync($"New Throughput {throughput} RU/s");
}

-

Save all of your open editor tabs.

-

In the terminal pane, enter and execute the following command:

dotnet run

-

Observe the output of the console application.

You should see the initial provisioned value before changing to 1000.

-

In the Azure Cosmos DB blade, locate and select the Data Explorer link on the left side of the blade.

-

In the Data Explorer section, expand the FinancialDatabase database node, expand the PeopleCollection node, and then select the Scale & Settings option.

-

In the Settings section, locate the Throughput field and note that it is now set to 1000.

Note that you may need to refresh the Data Explorer to see the new value.
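To apply the 9-5 usage pattern described at the start of this section, the value passed to ReplaceThroughputAsync can be chosen from the time of day. The following is a minimal sketch of that selection logic only. The ThroughputSchedule class, its method names, and the hour boundaries are assumptions for illustration, and how the code gets triggered on a schedule is outside the scope of this lab.

```csharp
using System;

public static class ThroughputSchedule
{
    // Returns the peak value from 09:00 up to (but not including) 17:00 local time,
    // and the off-peak value otherwise. The hour boundaries are an assumption.
    public static int TargetFor(DateTime localTime, int peakThroughput, int offPeakThroughput)
    {
        bool businessHours = localTime.Hour >= 9 && localTime.Hour < 17;
        return businessHours ? peakThroughput : offPeakThroughput;
    }

    // Avoids requesting less than the container's current minimum, which would throw.
    // Falls back to 400 RU/s when no minimum is reported.
    public static int ClampToMinimum(int target, int? minThroughput)
    {
        return Math.Max(target, minThroughput ?? 400);
    }
}
```

Inside UpdateThroughput, the result could replace the hard-coded 1000, for example by computing TargetFor(DateTime.Now, 1000, 400), clamping it with ClampToMinimum using throughputResponse.MinThroughput, and passing the clamped value to ReplaceThroughputAsync.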

If this is your final lab, follow the steps in Removing Lab Assets to remove all lab resources.